Deep hybrid order-independent transparency

G. Tsopouridis, I. Fudos, Andreas-Alexandros Vasilakis

CGI 2022, published online in The Visual Computer on July 1, 2022 (to appear)

Abstract

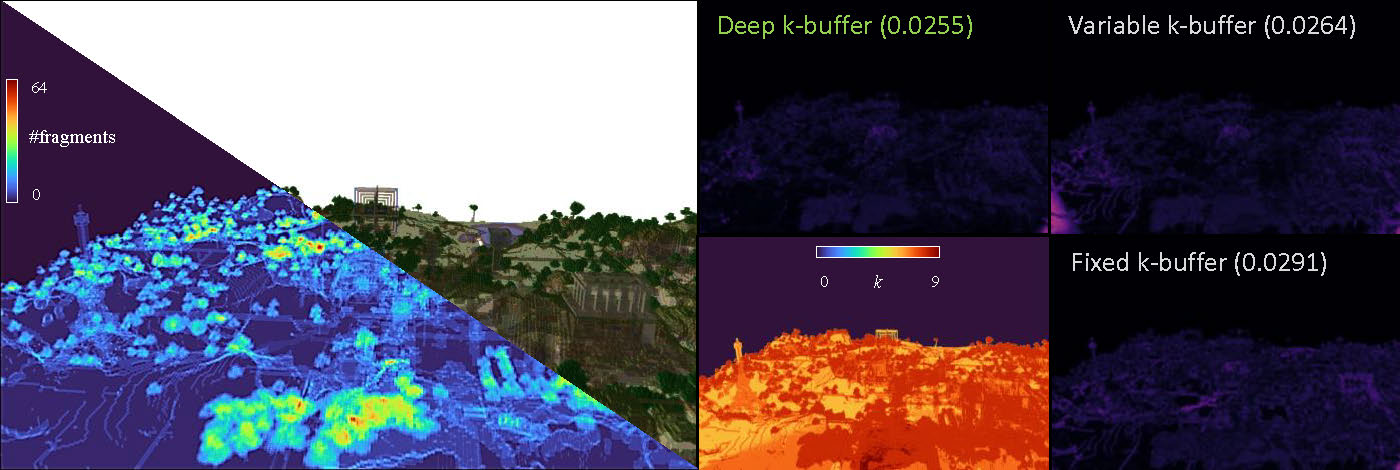

Correctly compositing transparent fragments is an important and long-standing open problem in real-time computer graphics. Multifragment rendering is considered a key solution for providing high-quality order-independent transparency at interactive frame rates. To achieve this, practical implementations severely constrain the overall memory budget by adopting bounded fragment configurations such as the k-buffer. However, relying on an iterative trial-and-error procedure, in which the value of k is manually configured per scenario, can result in poor memory utilization and view-dependent artifacts. To this end, we introduce a novel intelligent k-buffer approach that performs a non-uniform per-pixel fragment allocation guided by a deep learning prediction mechanism. A hybrid scheme is further employed to approximately blend the non-significant (remaining) fragments and thus contribute to a better estimate of the final color. An experimental evaluation substantiates that our method outperforms previous approaches when evaluating transparency in various high-depth-complexity scenes.
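The abstract describes the method only at a high level, so the following Python sketch illustrates the general idea of a hybrid k-buffer under stated assumptions; it is not the authors' implementation. Each pixel keeps up to k nearest fragments sorted by depth and composites them exactly front to back, while fragments evicted from the buffer are folded into an order-independent accumulator and resolved with weighted-average blending (an assumed stand-in for the paper's approximate blending of the remaining fragments). Because k is a per-pixel constructor argument, capacities can differ across pixels. The deep learning predictor is not reproduced: `allocate_k` simply scales hypothetical per-pixel predictions so that their sum fits a global fragment budget. `Fragment`, `HybridKBuffer`, and `allocate_k` are hypothetical names.

```python
import bisect
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]


@dataclass(order=True)
class Fragment:
    depth: float                          # smaller depth = closer to the camera
    color: Color = field(compare=False)   # straight (non-premultiplied) RGB
    alpha: float = field(compare=False)   # opacity in [0, 1]


class HybridKBuffer:
    """Hypothetical per-pixel storage: the k nearest fragments are kept exactly,
    overflow fragments go into a weighted-average accumulator (assumption)."""

    def __init__(self, k: int):
        self.k = k
        self.head: List[Fragment] = []    # sorted front to back, len(head) <= k
        self.tail_rgb = [0.0, 0.0, 0.0]   # sum of alpha * color over evicted fragments
        self.tail_alpha = 0.0             # sum of alpha over evicted fragments
        self.tail_count = 0               # number of evicted fragments

    def insert(self, frag: Fragment) -> None:
        bisect.insort(self.head, frag)    # ordering compares depth only
        if len(self.head) > self.k:
            far = self.head.pop()         # evict the farthest fragment into the tail
            for c in range(3):
                self.tail_rgb[c] += far.alpha * far.color[c]
            self.tail_alpha += far.alpha
            self.tail_count += 1

    def resolve(self, background: Color = (0.0, 0.0, 0.0)) -> Color:
        out = [0.0, 0.0, 0.0]
        transmittance = 1.0
        # Exact front-to-back compositing of the k nearest fragments.
        for f in self.head:
            for c in range(3):
                out[c] += transmittance * f.alpha * f.color[c]
            transmittance *= 1.0 - f.alpha
        # Approximate the evicted fragments as tail_count layers sharing the
        # alpha-weighted average color and the mean opacity, placed behind the head.
        if self.tail_count and self.tail_alpha > 0.0:
            avg_alpha = self.tail_alpha / self.tail_count
            coverage = 1.0 - (1.0 - avg_alpha) ** self.tail_count
            for c in range(3):
                out[c] += transmittance * coverage * self.tail_rgb[c] / self.tail_alpha
            transmittance *= 1.0 - coverage
        # Whatever light is still transmitted comes from the background.
        for c in range(3):
            out[c] += transmittance * background[c]
        return (out[0], out[1], out[2])


def allocate_k(predicted: Sequence[float], budget: int) -> List[int]:
    """Scale non-negative per-pixel k predictions (a placeholder for a learned
    predictor's output) so that their sum does not exceed the global budget."""
    total = sum(predicted)
    if total <= budget:
        return [int(p) for p in predicted]
    scale = budget / total
    return [int(p * scale) for p in predicted]  # flooring keeps sum(k) <= budget


if __name__ == "__main__":
    frags = [Fragment(3.0, (0.0, 0.0, 1.0), 0.5),   # blue, farthest
             Fragment(1.0, (1.0, 0.0, 0.0), 0.5),   # red, nearest
             Fragment(2.0, (0.0, 1.0, 0.0), 0.5)]   # green, middle
    pixel = HybridKBuffer(k=1)
    for f in frags:
        pixel.insert(f)
    print(pixel.resolve())            # → (0.5, 0.1875, 0.1875)
    print(allocate_k([8, 4, 4], 8))   # → [4, 2, 2]
```

With k = 3 the same three fragments resolve exactly to (0.5, 0.25, 0.125); with k = 1 the nearest (red) fragment is still exact and the total opacity is preserved here because the evicted fragments share the same alpha, while their colors are averaged rather than ordered. A GPU implementation would keep these structures in per-pixel buffers rather than Python lists.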

@Article{Tsopouridis2022,
  author={Tsopouridis, Grigoris and Fudos, Ioannis and Vasilakis, Andreas-Alexandros},
  title={Deep hybrid order-independent transparency},
  journal={The Visual Computer},
  year={2022},
  month={Jul},
  day={01},
  abstract={Correctly compositing transparent fragments is an important and long-standing open problem in real-time computer graphics. Multifragment rendering is considered a key solution to providing high-quality order-independent transparency at interactive frame rates. To achieve that, practical implementations severely constrain the overall memory budget by adopting bounded fragment configurations such as the k-buffer. Relying on an iterative trial-and-error procedure, however, where the value of k is manually configured per case scenario, can inevitably result in bad memory utilization and view-dependent artifacts. To this end, we introduce a novel intelligent k-buffer approach that performs a non-uniform per pixel fragment allocation guided by a deep learning prediction mechanism. A hybrid scheme is further employed to facilitate the approximate blending of non-significant (remaining) fragments and thus contribute to a better overall final color estimation. An experimental evaluation substantiates that our method outperforms previous approaches when evaluating transparency in various high-depth-complexity scenes.},
  issn={1432-2315},
  doi={10.1007/s00371-022-02562-7},
  url={https://doi.org/10.1007/s00371-022-02562-7}
}